Launch Locally

🚀 Deployment

GLPI

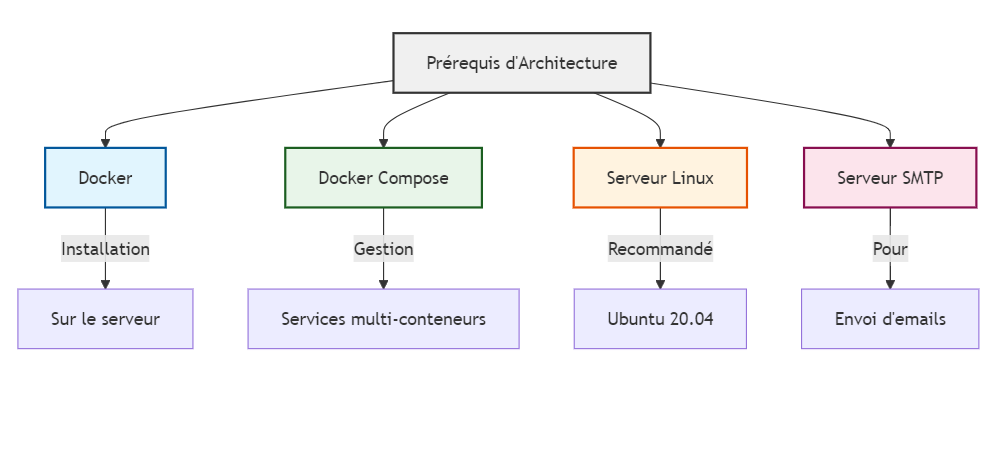

Prerequisites

Prerequisite Details

| Component | Description | Version/Specification |
|---|---|---|
| Docker | Containerization platform | Recent stable version |
| Docker Compose | Tool for defining and managing multi-container applications | Compatible with the installed Docker version |
| Server | Operating system | Linux (Ubuntu 20.04 LTS recommended) |
| SMTP server | Email delivery service | Configured and reachable from the server's network |

Important note: Make sure all components are up to date and correctly configured before installing GLPI.
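The prerequisites above can be checked before going further. The following is a minimal sketch, assuming the `docker` binary is expected on the `PATH`; the helper name `missing_tools` is hypothetical and only illustrates the idea.

```python
import shutil

# Command-line tools this guide relies on (assumption: found through PATH).
REQUIRED_TOOLS = ("docker",)


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools from `tools` that cannot be located on PATH."""
    return [tool for tool in tools if which(tool) is None]


def report(missing):
    """Build a one-line, human-readable status message."""
    if missing:
        return "Missing prerequisites: " + ", ".join(missing)
    return "All required tools were found on PATH."
```

Calling `print(report(missing_tools()))` on the target server gives a quick status line. The `which` parameter exists only so that the lookup can be replaced in tests.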

📄 Docker Compose Configuration

Create a docker-compose.yml file:

    version: "3.2"

    networks:
      public:
        external: true
      glpi:
        driver: overlay
        attachable: true

    services:
      mysql:
        image: mysql:8.0
        hostname: mysql
        command: --default-authentication-plugin=caching_sha2_password
        env_file:
          - ${GLPI_STACK_DIR}/.env
        volumes:
          - ${GLPI_VOLUMES_DIR}/glpidb:/var/lib/mysql
        networks:
          - glpi

      glpi:
        image: elestio/glpi:10.0.16
        hostname: glpi
        env_file:
          - ${GLPI_STACK_DIR}/.env
        ports:
          - "8899:80"
        volumes:
          - /etc/timezone:/etc/timezone:ro
          - /etc/localtime:/etc/localtime:ro
          - ${GLPI_VOLUMES_DIR}/templates:/templates
          - ${GLPI_VOLUMES_DIR}/glpidata:/var/www/html/glpi
          - ${GLPI_VOLUMES_DIR}/glpiconfig/start.sh:/opt/start.sh
        environment:
          - TIMEZONE=Europe/Brussels
        depends_on:
          - mysql
        deploy:
          labels:
            - "traefik.enable=true"
            - "traefik.http.routers.glpi.rule=Host(`glpi.testing.kaisens.fr`)"
            - "traefik.http.routers.glpi.entrypoints=websecure"
            - "traefik.http.routers.glpi.service=glpi"
            - "traefik.http.services.glpi.loadbalancer.server.port=80"
        networks:
          - glpi
          - public

🚀 Deployment Steps

- Create the docker-compose.yml file.
- Start the services:

    docker stack deploy -c docker-compose.yml glpi-svc
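The compose file references `${GLPI_STACK_DIR}` and `${GLPI_VOLUMES_DIR}`, so both need to be defined in the shell that runs the deployment. The sketch below checks them before building the deploy command. It assumes Docker Swarm is already initialized, and the helper names (`missing_variables`, `build_deploy_command`, `deploy`) are hypothetical.

```python
import os
import subprocess

# Variables referenced by the GLPI compose file.
REQUIRED_VARIABLES = ("GLPI_STACK_DIR", "GLPI_VOLUMES_DIR")


def missing_variables(names=REQUIRED_VARIABLES, environ=None):
    """Return the variable names that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in names if not environ.get(name)]


def build_deploy_command(compose_file="docker-compose.yml", stack_name="glpi-svc"):
    """Build the `docker stack deploy` command as an argument list."""
    return ["docker", "stack", "deploy", "-c", compose_file, stack_name]


def deploy():
    """Check the environment, then run the deployment."""
    missing = missing_variables()
    if missing:
        raise RuntimeError("Unset variables: " + ", ".join(missing))
    # check=True raises CalledProcessError if docker exits with an error.
    subprocess.run(build_deploy_command(), check=True)
```

Failing early on an unset variable avoids deploying a stack whose volume paths resolve to unintended locations.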

OCS Inventory

📄 Docker Compose Configuration

Create a docker-compose.yml file for OCS Inventory:

    version: '3'

    networks:
      public:
        external: true
      glpi_glpi:
        external: true

    services:
      ocsapplication:
        image: ocsinventory/ocsinventory-docker-image:2.12.2
        env_file:
          - ${OCSINVENTORY_STACK_DIR}/.env
        ports:
          - 1580:80
        volumes:
          - ${OCSINVENTORY_VOLUMES_DIR}/perlcomdata:/etc/ocsinventory-server
          - ${OCSINVENTORY_VOLUMES_DIR}/ocsreportsdata:/usr/share/ocsinventory-reports/ocsreports/extensions
          - ${OCSINVENTORY_VOLUMES_DIR}/varlibdata:/var/lib/ocsinventory-reports
          - ${OCSINVENTORY_VOLUMES_DIR}/httpdconfdata:/etc/apache2/conf-available
          - ${OCSINVENTORY_VOLUMES_DIR}/profilesconfdata:/usr/share/ocsinventory-reports/ocsreports/config/profiles
        environment:
          OCS_DB_USER: ${MYSQL_USER}
          OCS_DB_PASS: ${MYSQL_PASSWORD}
          OCS_DB_NAME: ${MYSQL_DATABASE}
          OCS_SSL_ENABLED: 0
          OCS_DBI_PRINT_ERROR: 0
        depends_on:
          - ocsdb
        deploy:
          labels:
            - traefik.enable=true
            - traefik.http.routers.ocsapplication.rule=Host(`ocsinventory.testing.kaisens.fr`)
            - traefik.http.routers.ocsapplication.entrypoints=websecure
            - traefik.http.routers.ocsapplication.service=ocsapplication
            - traefik.http.services.ocsapplication.loadbalancer.server.port=80
        networks:
          - public
          - glpi_glpi

      ocsdb:
        image: mysql:8.0
        env_file:
          - ${OCSINVENTORY_STACK_DIR}/.env
        volumes:
          - ${OCSINVENTORY_VOLUMES_DIR}/sql/:/docker-entrypoint-initdb.d/
          - ${OCSINVENTORY_VOLUMES_DIR}/sqldata:/var/lib/mysql
        networks:
          - glpi_glpi

Flask Application

📄 Docker Compose Configuration

Create a docker-compose.yml file for the Flask application:

    version: '3.8'

    services:
      flask-app:
        build: .
        container_name: flask_app
        ports:
          - "5000:5000"
        environment:
          - FLASK_ENV=development
        volumes:
          - .:/apps/logs
        networks:
          - app-network

    networks:
      app-network:
        driver: bridge

🐳 Dockerfile

Create a Dockerfile for the Flask application:

    ARG IMAGE_BASE_URL="releases.registry.docker.kaisens.fr"
    ARG PYTHON_IMAGE_VERSION="3.10-4"
    ARG PYTHON_BUILD_IMAGE_VERSION="${PYTHON_IMAGE_VERSION}-3"
    ARG BUILD_ENVIRONMENT="prod"
    ARG TMP_SETUP_DIRPATH="/tmp/src"
    ARG VENV_DIRPATH="/opt/ticketing-synchronizer/venv"
    ARG APP_HOME="/app"

    # Build stage: create the virtualenv and install the dependencies
    FROM ${IMAGE_BASE_URL}/kaisensdata/devops/docker-images/python-build:${PYTHON_BUILD_IMAGE_VERSION} AS builder
    ARG VENV_DIRPATH
    ARG TMP_SETUP_DIRPATH
    ARG BUILD_ENVIRONMENT
    USER root
    RUN virtualenv "${VENV_DIRPATH}"
    COPY requirements/ "${TMP_SETUP_DIRPATH}/requirements"
    RUN "${VENV_DIRPATH}/bin/pip" install --no-cache-dir -r "${TMP_SETUP_DIRPATH}/requirements/${BUILD_ENVIRONMENT}" && \
        "${VENV_DIRPATH}/bin/pip" install Pillow
    USER "${OPS_USER}"

    # Runtime stage: copy the virtualenv and the application code
    FROM ${IMAGE_BASE_URL}/kaisensdata/devops/docker-images/python:${PYTHON_IMAGE_VERSION} AS run
    ARG APP_HOME
    ARG VENV_DIRPATH
    ARG BUILD_ENVIRONMENT
    USER root
    COPY --from=builder --chown="${OPS_USER}:${OPS_USER}" "${VENV_DIRPATH}" "${VENV_DIRPATH}"
    ENV PATH="${VENV_DIRPATH}/bin:${PATH}"
    ENV FLASK_APP=app.py
    COPY --chown="${OPS_USER}:${OPS_USER}" . "${APP_HOME}"
    RUN chown "${OPS_USER}:${OPS_USER}" "${APP_HOME}"
    USER "${OPS_USER}"
    WORKDIR "${APP_HOME}"
    EXPOSE 5000
    CMD ["flask", "run", "--host=0.0.0.0"]

💻 app.py File

    import json
    import logging
    import os
    import smtplib
    from email.mime.multipart import MIMEMultipart
    from email.mime.text import MIMEText

    import requests
    from flask import Flask, request, jsonify

    # SMTP settings used by send_email()
    email_host = 'ssl0.ovh.net'
    email_port = 465
    email_user = 'moodle@kaischool.fr'
    email_password = 'Moodle.2023!'

    app = Flask(__name__)

    # GLPI settings, read from environment variables (with development defaults)
    glpi_restapi_url = os.getenv('GLPI_RESTAPI_URL', 'https://glpi.testing.kaisens.fr/apirest.php')
    glpi_url_init = os.getenv('GLPI_URL_INIT', 'http://192.168.56.7:8091/apirest.php/initSession')
    glpi_url_ticket = os.getenv('GLPI_URL_TICKET', 'http://192.168.56.7:8091/apirest.php/Ticket')
    username = os.getenv('GLPI_USERNAME', 'glpi')
    password = os.getenv('GLPI_PASSWORD', 'glpi')
    app_token = os.getenv('GLPI_APP_TOKEN', 'wZlcazGYkDzWTs3nXgtBOuV6Al9qQbASwxpidwmL')
    user_token = os.getenv('GLPI_USER_TOKEN', 'P2XcgOwXzSgauIc8VR6HPnb11Zl5qVFRvw7FuRpz')
    glpi_url_category = os.getenv('GLPI_URL_CATEGORY', 'http://192.168.56.7:8091/apirest.php/ITILCategory')
    glpi_url_groups = os.getenv('GLPI_URL_GROUPS', 'http://192.168.56.7:8091/apirest.php/group')

    # Redmine settings, read from environment variables
    redmine_url = os.getenv('REDMINE_URL', 'http://192.168.56.7:8082')
    redmine_api_key = os.getenv('REDMINE_API_KEY', '12965c718c99fbb91b40c3a3f0793f6dac980243')
    redmine_project_name = os.getenv('REDMINE_PROJECT_NAME', 'devops')

    # Base URL for GLPI ticket links
    glpi_ticket_url_base = os.getenv('GLPI_TICKET_URL_BASE', 'http://192.168.56.7:8091/front/ticket.form.php?id=')

    # Logging configuration (only ERROR and above are written to the log file)
    logging.basicConfig(
        filename=os.getenv('LOG_FILE', '/apps/logs/ticket_creation_errors.log'),
        level=logging.ERROR,
        format='%(asctime)s - %(levelname)s - %(message)s',
    )

    # In-memory store of the GLPI tickets created by this process
    glpi_tickets = {}

    def get_category_id_by_name(category_name):
        """Return the ID of the GLPI ITIL category matching the name (case-insensitive)."""
        try:
            # Open a GLPI session
            session_response = requests.post(glpi_url_init, headers={
                'Authorization': f'user_token {user_token}',
                'App-Token': app_token
            }, json={'login': username, 'password': password})
            session_response.raise_for_status()
            session_token = session_response.json().get('session_token')
            if not session_token:
                raise ValueError("Session token not received from GLPI")

            # Fetch the categories
            response = requests.get(glpi_url_category, headers={
                'Session-Token': session_token,
                'App-Token': app_token
            })
            response.raise_for_status()
            categories = response.json()

            # Find the ID of the matching category
            for category in categories:
                if category['name'].lower() == category_name.lower():
                    return category['id']
            raise ValueError(f"Category '{category_name}' not found")
        except requests.exceptions.RequestException as e:
            logging.error("Failed to retrieve the categories: %s", str(e))
            raise

    def get_bug_tracker_id():
        """Return the ID of the Redmine tracker named 'bug' (case-insensitive)."""
        try:
            response = requests.get(f"{redmine_url}/trackers.json", headers={
                'X-Redmine-API-Key': redmine_api_key
            })
            response.raise_for_status()
            trackers = response.json()['trackers']
            for tracker in trackers:
                if tracker['name'].lower() == 'bug':
                    return tracker['id']
            raise ValueError("Tracker 'bug' not found")
        except requests.exceptions.RequestException as e:
            logging.error("Failed to retrieve the trackers: %s", str(e))
            raise

    def create_glpi_ticket(alert):
        """Create a GLPI ticket for an alert and return (ticket_id, ticket_url)."""
        try:
            # Initiate session with GLPI
            session_response = requests.post(glpi_url_init, headers={
                'Authorization': f'user_token {user_token}',
                'App-Token': app_token
            }, json={'login': username, 'password': password})
            session_response.raise_for_status()
            session_token = session_response.json().get('session_token')
            if not session_token:
                raise ValueError("Session token not received from GLPI")

            # Build the ticket title and content from the alert
            ticket_title = f"ALERTE - {alert['labels'].get('alertname', 'Inconnu')} - {alert['labels'].get('instance', 'Inconnu')}"
            ticket_content = f"{alert['annotations'].get('summary', 'No details available')}"

            # Get the category ID for 'supervision'
            category_id = get_category_id_by_name("supervision")
            ticket_data = {
                'input': {
                    'name': ticket_title,
                    'content': ticket_content,
                    'itilcategories_id': category_id  # Assign the category here
                }
            }
            response = requests.post(glpi_url_ticket, json=ticket_data, headers={
                'Session-Token': session_token,
                'App-Token': app_token
            })
            response.raise_for_status()
            ticket_id = response.json()['id']
            glpi_ticket_url = f"{glpi_ticket_url_base}{ticket_id}"

            # Store ticket data
            glpi_tickets[ticket_id] = {'url': glpi_ticket_url, 'alert': alert}

            # Create the "N2" group if it doesn't exist, then assign the ticket to it
            try:
                n2_group_id = check_and_create_group("N2")
                assign_ticket_to_group(ticket_id, n2_group_id)
            except Exception as e:
                logging.error(f"Failed to assign ticket {ticket_id} to N2 group: {str(e)}")

            # Create the "N1" group if it doesn't exist, then set it as requester
            try:
                n1_group_id = check_and_create_group("N1")
                assign_ticket_to_requester(ticket_id, n1_group_id)
            except Exception as e:
                logging.error(f"Failed to set N1 group as requester of ticket {ticket_id}: {str(e)}")

            send_email(glpi_ticket_url=glpi_ticket_url, ticket_title=ticket_title, ticket_content=ticket_content)
            return ticket_id, glpi_ticket_url
        except requests.exceptions.RequestException as e:
            logging.error("Failed to create the GLPI ticket: %s", str(e))
            raise

    def send_email(glpi_ticket_url, ticket_title, ticket_content):
        """Send a supervision alert email that links to the GLPI ticket."""
        try:
            # The SMTP settings (email_host, email_port, email_user, email_password)
            # are the module-level values defined at the top of the file.
            sender_email = "supervision@kaisens.fr"
            recipient_email = "ckanzari@smarte-conseil.fr"

            # Build the message
            msg = MIMEMultipart()
            msg['From'] = sender_email
            msg['To'] = recipient_email
            msg['Subject'] = "ALERTE DE SUPERVISION : Vérifiez vos métriques"

            # Message body
            body = (
                "Bonjour,\n\n"
                "Une alerte de supervision a été générée en raison de problèmes détectés dans les métriques de votre système.\n\n"
                "Détails de l'alerte :\n"
                f"- **Titre du ticket** : {ticket_title}\n"
                f"- **Contenu du ticket** : {ticket_content}\n\n"
                "Veuillez vérifier les métriques suivantes et prendre les mesures appropriées pour résoudre les problèmes détectés.\n\n"
                f"URL du ticket GLPI : {glpi_ticket_url}\n\n"
                "Merci de votre attention.\n\n"
                "Cordialement,\n"
                "L'équipe de supervision"
            )
            msg.attach(MIMEText(body, 'plain'))

            # Connect to the SMTP server over SSL and send the email
            with smtplib.SMTP_SSL(email_host, email_port) as server:
                server.login(email_user, email_password)
                server.sendmail(sender_email, recipient_email, msg.as_string())
            logging.info("Alert please check your metrics")
        except Exception as e:
            logging.error(f"Failed to send the email: {str(e)}")

    # Test call: it sends a sample email every time the module is loaded.
    # Remove it outside of testing.
    send_email(
        glpi_ticket_url="https://glpi.testing.kaisens.fr/front/ticket.php?id=123",
        ticket_title="ALERTE - Problème de performance",
        ticket_content="Un de vos metrics a touché le threshold. Veuillez vérifier immédiatement."
    )

    def create_redmine_ticket(glpi_ticket_id):
        """Create a Redmine issue from a stored GLPI ticket and return the issue ID."""
        try:
            # glpi_tickets stores {'url': ..., 'alert': ...} for each ticket ID
            entry = glpi_tickets.get(glpi_ticket_id)
            if not entry:
                raise ValueError("GLPI ticket not found")
            alert = entry['alert']
            glpi_ticket_url = entry['url']
            bug_tracker_id = get_bug_tracker_id()
            issue_subject = f"ALERTE - {alert['labels'].get('alertname', 'Inconnu')} - GLPI Ticket #{glpi_ticket_id}"
            issue_description = f"{alert['annotations'].get('summary', 'No details available')}\n\nURL du ticket GLPI : {glpi_ticket_url}"
            issue_data = {
                'issue': {
                    'project_id': redmine_project_name,
                    'tracker_id': bug_tracker_id,
                    'subject': issue_subject,
                    'description': issue_description
                }
            }
            response = requests.post(f"{redmine_url}/issues.json", json=issue_data, headers={
                'X-Redmine-API-Key': redmine_api_key
            })
            response.raise_for_status()
            return response.json()['issue']['id']
        except requests.exceptions.RequestException as e:
            logging.error("Failed to create the Redmine ticket: %s", str(e))
            raise

    # NOTE: this definition is shadowed by the second get_ticket_by_id defined
    # further down in this file; only the later one is used at runtime.
    def get_ticket_by_id(id):
        """Return the full GLPI ticket as a dictionary."""
        try:
            # Fetch GLPI ticket details
            session_response = requests.post(glpi_url_init, headers={
                'Authorization': f'user_token {user_token}',
                'App-Token': app_token
            }, json={'login': username, 'password': password})
            session_response.raise_for_status()
            session_token = session_response.json().get('session_token')
            if not session_token:
                raise ValueError("Session token not received from GLPI")
            glpi_response = requests.get(f"{glpi_url_ticket}/{id}", headers={
                'Session-Token': session_token,
                'App-Token': app_token
            })
            glpi_response.raise_for_status()
            glpi_ticket = glpi_response.json()
            logging.info("GLPI ticket details retrieved: %s", json.dumps(glpi_ticket, indent=4))
            return glpi_ticket
        except requests.exceptions.RequestException as e:
            logging.error("Failed to retrieve the GLPI ticket details: %s", str(e))
            raise
        except ValueError as e:
            logging.error("Data processing error: %s", str(e))
            raise
        except Exception as e:
            logging.error("Unexpected error: %s", str(e))
            raise

    def get_glpi_categories():
        """Return the names of all GLPI ITIL categories."""
        try:
            session_response = requests.post(glpi_url_init, headers={
                'Authorization': f'user_token {user_token}',
                'App-Token': app_token
            }, json={'login': username, 'password': password})
            session_response.raise_for_status()
            session_token = session_response.json().get('session_token')
            if not session_token:
                raise ValueError("Session token not received from GLPI")
            response = requests.get(f"{glpi_url_category}/", headers={
                'Session-Token': session_token,
                'App-Token': app_token
            })
            response.raise_for_status()
            categories = response.json()
            category_names = [category['name'] for category in categories]
            logging.info("GLPI category names retrieved: %s", json.dumps(category_names, indent=4))
            return category_names
        except requests.exceptions.RequestException as e:
            logging.error("Failed to retrieve the GLPI categories: %s", str(e))
            raise
        except ValueError as e:
            logging.error("Data processing error: %s", str(e))
            raise
        except Exception as e:
            logging.error("Unexpected error: %s", str(e))
            raise

    def get_session_token():
        """Open a GLPI session and return its session token."""
        try:
            session_response = requests.post(glpi_url_init, headers={
                'Authorization': f'user_token {user_token}',
                'App-Token': app_token
            }, json={'login': username, 'password': password})
            session_response.raise_for_status()
            session_token = session_response.json().get('session_token')
            if not session_token:
                raise ValueError("Session token not received from GLPI")
            return session_token
        except requests.exceptions.RequestException as e:
            logging.error(f"Failed to get session token: {str(e)}")
            raise

    def get_group_id_by_name(group_name):
        """Return the ID of the GLPI group matching the name (case-insensitive)."""
        try:
            session_token = get_session_token()
            # The token value itself is kept out of the logs
            logging.info("Session token retrieved")
            response = requests.get(glpi_url_groups, headers={
                'Session-Token': session_token,
                'App-Token': app_token
            })
            response.raise_for_status()
            groups = response.json()
            logging.info(f"Groups retrieved: {groups}")
            for group in groups:
                if group['name'].lower() == group_name.lower():
                    return group['id']
            raise ValueError(f"Group '{group_name}' not found")
        except requests.exceptions.RequestException as e:
            logging.error(f"Failed to retrieve groups: {str(e)}")
            raise
        except ValueError as ve:
            logging.error(f"Value error: {str(ve)}")
            raise

    def assign_ticket_to_group(ticket_id, group_id):
        """Add the group to the ticket as the assigned group."""
        try:
            session_token = get_session_token()
            url = f"{glpi_url_ticket}/{ticket_id}/Group_Ticket/"
            headers = {
                "Content-Type": "application/json",
                "App-Token": app_token,
                "Session-Token": session_token
            }
            data = {
                "input": {
                    "tickets_id": ticket_id,
                    "groups_id": group_id,
                    "type": 2  # 2 corresponds to "Assigned" type
                }
            }
            response = requests.post(url, headers=headers, json=data)
            response.raise_for_status()  # This will raise an exception for HTTP errors
            return response.json()
        except requests.exceptions.RequestException as e:
            logging.error(f"Failed to assign ticket to group: {str(e)}")
            raise

    def assign_ticket_to_requester(ticket_id, group_id):
        """Add the group to the ticket as the requester group."""
        try:
            session_token = get_session_token()
            url = f"{glpi_url_ticket}/{ticket_id}/Group_Ticket/"
            headers = {
                "Content-Type": "application/json",
                "App-Token": app_token,
                "Session-Token": session_token
            }
            data = {
                "input": {
                    "tickets_id": ticket_id,
                    "groups_id": group_id,
                    "type": 1  # 1 corresponds to "Requester" type
                }
            }
            response = requests.post(url, headers=headers, json=data)
            response.raise_for_status()  # This will raise an exception for HTTP errors
            return response.json()
        except requests.exceptions.RequestException as e:
            logging.error(f"Failed to set group as ticket requester: {str(e)}")
            raise

    @app.route('/ticket/<int:ticket_id>', methods=['GET'])
    def get_ticket(ticket_id):
        """Return the details of a GLPI ticket as JSON."""
        ticket_info = get_ticket_by_id(ticket_id)
        if ticket_info:
            return jsonify(ticket_info)
        else:
            return jsonify({"message": "Error while retrieving the ticket"}), 400

    @app.route('/api/send_email', methods=['POST'])
    def api_send_email():
        """Send an alert email from the JSON fields of the request."""
        try:
            data = request.json
            glpi_ticket_url = data.get('glpi_ticket_url')
            ticket_title = data.get('ticket_title')
            ticket_content = data.get('ticket_content')
            if not all([glpi_ticket_url, ticket_title, ticket_content]):
                return jsonify({'error': 'Missing required fields'}), 400
            send_email(glpi_ticket_url, ticket_title, ticket_content)
            return jsonify({'message': 'Email sent successfully'}), 200
        except Exception as e:
            logging.error(f"Failed to send email: {str(e)}")
            return jsonify({'error': 'Failed to send email', 'details': str(e)}), 500

    def check_and_create_group(group_name):
        """Return the ID of the named GLPI group, creating the group if needed."""
        try:
            session_token = get_session_token()

            # Check if the group exists
            response = requests.get(glpi_url_groups, headers={
                'Session-Token': session_token,
                'App-Token': app_token
            })
            response.raise_for_status()
            groups = response.json()
            for group in groups:
                if group['name'].lower() == group_name.lower():
                    logging.info(f"Group '{group_name}' already exists with ID: {group['id']}")
                    return group['id']

            # If the group doesn't exist, create it
            create_group_data = {
                'input': {
                    'name': group_name
                }
            }
            create_response = requests.post(glpi_url_groups,
                                            headers={
                                                'Session-Token': session_token,
                                                'App-Token': app_token,
                                                'Content-Type': 'application/json'
                                            },
                                            json=create_group_data)
            create_response.raise_for_status()
            new_group_id = create_response.json()['id']
            logging.info(f"Group '{group_name}' created successfully with ID: {new_group_id}")
            return new_group_id
        except requests.exceptions.RequestException as e:
            logging.error(f"Failed to check/create group '{group_name}': {str(e)}")
            raise
        except Exception as e:
            logging.error(f"Unexpected error while checking/creating group '{group_name}': {str(e)}")
            raise

    # Make sure both support groups exist when the module is loaded
    try:
        n2_group_id = check_and_create_group("N2")
        logging.info(f"N2 group ID: {n2_group_id}")
    except Exception as e:
        logging.error(f"Error handling N2 group: {str(e)}")

    try:
        n1_group_id = check_and_create_group("N1")
        logging.info(f"N1 group ID: {n1_group_id}")
    except Exception as e:
        logging.error(f"Error handling N1 group: {str(e)}")

    @app.route('/webhook', methods=['POST'])
    def webhook():
        """Create one GLPI ticket for each alert in the request's 'alerts' list."""
        data = request.json
        session_token = get_session_token()
        for alert in data['alerts']:
            try:
                glpi_ticket_id, glpi_ticket_url = create_glpi_ticket(alert)

                # Link the ticket to the matching computer when a hostname label is present
                computer_name = alert.get('labels').get('hostname')
                if computer_name:
                    computer_id = get_computer_id_by_name(computer_name, session_token)
                    if computer_id:
                        logging.info(f"ids found for computer {computer_name} : {computer_id}")
                        assign_ticket_to_computer(COMPUTER_ID=computer_id[0], TICKET_ID=glpi_ticket_id, session_token=session_token)
            except Exception as e:
                logging.error("Error while processing the alert: %s", str(e))
                return jsonify({'status': 'error', 'message': str(e)}), 500
        return jsonify({'status': 'Tickets created in GLPI'})

    def get_ticket_by_id(id):
        """Return the 'name' and 'description' of a GLPI ticket, or None on failure."""
        try:
            # Fetch GLPI ticket details
            session_response = requests.post(glpi_url_init, headers={
                'Authorization': f'user_token {user_token}',
                'App-Token': app_token
            }, json={'login': username, 'password': password})
            session_response.raise_for_status()
            session_token = session_response.json().get('session_token')
            if not session_token:
                raise ValueError("Session token not received from GLPI")
            glpi_response = requests.get(f"{glpi_url_ticket}/{id}", headers={
                'Session-Token': session_token,
                'App-Token': app_token
            })
            glpi_response.raise_for_status()
            glpi_ticket = glpi_response.json()

            # Debug: Log the entire GLPI ticket response
            logging.info("Full GLPI ticket response: %s", json.dumps(glpi_ticket, indent=4))

            # Filter the ticket details to keep only 'name' and 'content' (description)
            filtered_ticket = {
                'name': glpi_ticket.get('name', 'N/A'),
                'description': glpi_ticket.get('content', 'N/A')
            }

            # Log the filtered GLPI ticket details
            log_message = {
                'glpi_ticket': filtered_ticket
            }
            logging.info("GLPI ticket details retrieved: %s", json.dumps(log_message, indent=4))
            return filtered_ticket
        # On failure, return None so that callers can build their own HTTP error
        # response instead of receiving a (response, status) tuple from a helper.
        except requests.exceptions.RequestException as e:
            logging.error("Failed to retrieve the GLPI ticket details: %s", str(e))
            return None
        except ValueError as e:
            logging.error("Data processing error: %s", str(e))
            return None
        except Exception as e:
            logging.error("Unexpected error: %s", str(e))
            return None

    @app.route('/redmine/accept/<int:id>', methods=['GET', 'POST'])
    def accept_redmine_ticket(id):
        """Create a Redmine issue from the GLPI ticket with the given ID."""
        try:
            # Fetch GLPI ticket details
            glpi_ticket_response = get_ticket_by_id(id)
            if isinstance(glpi_ticket_response, dict):  # Ensure it's a dictionary
                ticket_name = glpi_ticket_response.get('name', 'N/A')
                ticket_description = glpi_ticket_response.get('description', 'N/A')
                bug_tracker_id = get_bug_tracker_id()
                redmine_ticket_payload = {
                    'issue': {
                        'project_id': redmine_project_name,  # Make sure this is the correct project identifier
                        'subject': ticket_name,
                        'description': ticket_description,
                        'tracker_id': bug_tracker_id
                    }
                }
                redmine_response = requests.post(f"{redmine_url}/issues.json", headers={
                    'X-Redmine-API-Key': redmine_api_key,
                    'Content-Type': 'application/json'
                }, json=redmine_ticket_payload)
                redmine_response.raise_for_status()
                redmine_ticket = redmine_response.json()

                # Confirmation page: shows a SweetAlert2 dialog, then goes back
                response_js = f"""
                <html>
                <head>
                <script src="https://cdn.jsdelivr.net/npm/sweetalert2@11"></script>
                </head>
                <body>
                <script>
                Swal.fire({{
                    title: 'Succès!',
                    text: 'Ticket créé avec succès sur Redmine!\\nID du ticket Redmine: {redmine_ticket['issue']['id']}',
                    icon: 'success',
                    confirmButtonText: 'OK'
                }}).then(function() {{
                    window.history.back()
                }});
                </script>
                </body>
                </html>
                """
                return response_js
            else:
                raise ValueError("Failed to retrieve the GLPI ticket")
        except requests.exceptions.RequestException as e:
            logging.error("Failed to create the Redmine ticket: %s", str(e))
            return jsonify({'status': 'error', 'message': str(e)}), 500
        except ValueError as e:
            logging.error("Data processing error: %s", str(e))
            return jsonify({'status': 'error', 'message': str(e)}), 500
        except Exception as e:
            logging.error("Unexpected error: %s", str(e))
            return jsonify({'status': 'error', 'message': str(e)}), 500

    @app.route('/health', methods=['GET'])
    def health():
        return jsonify({'status': 'OK'}), 200

    def get_computer_id_by_name(computer_name, session_token):
        """Search GLPI computers by name and return the list of matching IDs, or None."""
        params = {
            'criteria[0][field]': 1,
            'criteria[0][searchtype]': 'contains',
            'criteria[0][value]': computer_name,
        }
        try:
            response = requests.get(f"{glpi_restapi_url}/search/Computer?forcedisplay[0]=2", headers={
                'App-Token': app_token,
                'session-token': session_token
            }, params=params)
            response.raise_for_status()
            COMPUTERS = response.json()
            logging.debug("Computer search response: %s", COMPUTERS)

            # Column '2' (requested through forcedisplay) is returned as the computer ID
            if COMPUTERS['totalcount'] >= 1:
                return [COMPUTER['2'] for COMPUTER in COMPUTERS['data']]
            logging.info(f"No computer found with the name: {computer_name}")
            return None
        except requests.exceptions.HTTPError as http_err:
            logging.error(f"HTTP error occurred: {http_err}")
            raise
        except Exception as e:
            # logging.ERROR is an integer level constant; the function is logging.error
            logging.error(f"unable to get computer id for computer {computer_name} : {str(e)}")
            raise

    def assign_ticket_to_computer(COMPUTER_ID, TICKET_ID, session_token):
        """Link a GLPI ticket to a computer; return the API response or None."""
        payload = {
            'input': {
                'tickets_id': TICKET_ID,
                'itemtype': "Computer",
                'items_id': COMPUTER_ID,
            }
        }
        try:
            response = requests.post(
                f"{glpi_restapi_url}/Ticket/{TICKET_ID}/Item_Ticket",
                headers={
                    'App-Token': app_token,
                    'session-token': session_token,
                    'Content-Type': 'application/json'
                },
                json=payload
            )
            # Only 201 (Created) is treated as success
            if response.status_code == 201:
                logging.info(f"Ticket {TICKET_ID} successfully assigned to computer {COMPUTER_ID}.")
                return response.json()  # Return the actual response if successful
            else:
                logging.error(f"Failed to assign ticket. Status code: {response.status_code}")
                return None
        except requests.exceptions.RequestException as e:
            logging.error(f"An error occurred: {e}")
            return None

    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=5000)
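The title and content that `create_glpi_ticket` sends to GLPI are derived from the alert's `labels` and `annotations`. The sketch below isolates that step as a pure function so it can be checked without a GLPI server. `build_ticket_input` is a hypothetical helper, not part of app.py, and unlike the original code it also tolerates alerts that lack `labels` or `annotations`.

```python
def build_ticket_input(alert, category_id=None):
    """Build the GLPI ticket payload for one alert.

    Mirrors the title/content formatting used in create_glpi_ticket,
    with the same fallback values for missing fields.
    """
    labels = alert.get("labels", {})
    annotations = alert.get("annotations", {})
    title = (
        f"ALERTE - {labels.get('alertname', 'Inconnu')}"
        f" - {labels.get('instance', 'Inconnu')}"
    )
    ticket_input = {
        "name": title,
        "content": annotations.get("summary", "No details available"),
    }
    # The category is optional here so the function stays free of network calls.
    if category_id is not None:
        ticket_input["itilcategories_id"] = category_id
    return {"input": ticket_input}


sample_alert = {
    "labels": {"alertname": "CPU_Usage_High", "instance": "server01"},
    "annotations": {"summary": "CPU usage has exceeded 90% on server01."},
}
payload = build_ticket_input(sample_alert, category_id=3)
```

Keeping the formatting separate from the HTTP calls makes it possible to unit-test the ticket wording on its own.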

🧪 Tests

🐳 Deployment with Docker Swarm

    # Initialize Docker Swarm
    docker swarm init

    # Deploy the stack
    docker stack deploy -c docker-compose.yml ticketing-synchronizer

    # Check the services
    docker stack services ticketing-synchronizer

🔍 Test with curl

    curl -X POST http://142.44.212.148:5000/webhook \
      -H "Content-Type: application/json" \
      -d '{
        "alerts": [
          {
            "labels": {
              "alertname": "CPU_Usage_High",
              "instance": "server01"
            },
            "annotations": {
              "summary": "CPU usage has exceeded 90% on server01."
            }
          }
        ]
      }'
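The same test can be scripted in Python. The sketch below uses only the standard library and wraps the alert in an `alerts` list, because the `/webhook` handler iterates over `data['alerts']`. The function names are hypothetical, and the URL is the one used in the curl command above.

```python
import json
import urllib.request

WEBHOOK_URL = "http://142.44.212.148:5000/webhook"  # address from the curl example


def build_webhook_payload(alertname, instance, summary):
    """Build a request body holding a single alert."""
    return {
        "alerts": [
            {
                "labels": {"alertname": alertname, "instance": instance},
                "annotations": {"summary": summary},
            }
        ]
    }


def post_webhook(payload, url=WEBHOOK_URL, timeout=10):
    """POST the payload as JSON and return (status_code, decoded_body)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, json.loads(response.read().decode("utf-8"))


test_payload = build_webhook_payload(
    "CPU_Usage_High", "server01", "CPU usage has exceeded 90% on server01."
)
```

Calling `post_webhook(test_payload)` sends the request. Note that `urlopen` raises `urllib.error.HTTPError` for 4xx and 5xx responses, so a failed ticket creation surfaces as an exception rather than a returned status.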